Two Methods to Scan for PII in Data Warehouses

Find PII data by scanning column names and/or data in your data warehouse

An important requirement for data privacy and protection is to find and catalog tables and columns that contain PII or PHI data in a data warehouse. Open-source data catalogs like Datahub and Amundsen enable the cataloging of information in data warehouses. Moreover, tables and columns can be tagged including PII and type of PII tags.

The missing piece is to scan, detect and tag tables and columns with PII.

This post describes two strategies to scan and detect PII as well as introduce an open-source application PIICatcher that can be used to scan data warehouses.

What is PII data?

PII or Personally Identifiable Information is generally defined as any piece of information that can be used to identify an individual. Traditionally, information such as SSN, mailing, email or phone numbers are considered PII. As technology has evolved, the scope of PII has increased to include login IDs, IP addresses, geolocation and biometric data.

There are different types of PII data:

Sensitive: is any data that can be used to directly link to an individual such as name, phone numbers, email and mailing address.

Non-Sensitive: is any data that can be used to indirectly linked to an individual such as location and race.

Specifically, PII as defined by Compliance laws are:

GDPR: PII is any data that can be used to clearly identify an individual. This also includes IP addresses, login ID details, social media posts, digital images, geolocation and more.

CCPA: Personal information is defined as information that identifies, relates to, describes, is reasonably capable of being associated with, or could reasonably be linked, directly or indirectly, with a particular consumer or household.

HIPAA: HIPAA also defines PII as any type of information that relates directly or indirectly to an individual.

Beyond the above definition, domains and businesses may have specific PII data collected by them. A simple example is PHI (Personal Health Information) collected by the health industry. Similarly, bank account or crypto-currency wallet IDs can also be used to identify individuals.

The following list can be considered as basic or common PII information that all industries need to manage:

Phone

Email

Credit Card

Address

Person/Name

Location

Date

Gender

Nationality

IP Address

SSN

User Name

Password

Challenges

An example record in the patients table in Synthetic Patient Records with COVID-19 is:

|Column Name|Data|

|-----------|----|

|Id|f0f3bc8d-ef38-49ce-a2bd-dfdda982b271|

|BIRTHDATE|2017-08-24|

|SSN|999-68-6630|

|FIRST|Jacinto644|

|LAST|Kris249|

|RACE|white|

|ETHNICITY|nonhispanic|

|GENDER|M|

|BIRTHPLACE|Beverly Massachusetts US|

|ADDRESS|888 Hickle Ferry Suite 38|

|CITY|Springfield|

|STATE|Massachusetts|

|COUNTY|Hampden County|

|ZIP|01106|

|LAT|42.151961474963535|

|LON|-72.59895940376188|

|HEALTHCARE_EXPENSES|8446.49|

|HEALTHCARE_COVERAGE|1499.08|

Note that most of the columns store PII data. However, it can be confusing to detect if a column stores PII data and the type of PII data. For example, if the scanner only scans the data in SSN then it may detect it as a phone number. Similarly, M or F in the GENDER column or white in RACE column, do not provide enough context to detect if it is PII and the type of PII data.In both these cases, it is easier to scan the column names.

Conversely, the payers table stores the name of health insurance companies in the NAME column. In this case, the scanner has to check the data to detect that the NAME column does not contain PII data.

Techniques to scan and detect PII data

Based on the previous section, the two main strategies to scan for PII data are:

Scan column and table names

Scan data stored in columns

Scan Data Warehouse Metadata

Data engineers use descriptive names for tables and columns to help users understand the data stored in them. Therefore, the names of tables and columns provide clues to the type of data stored. For example,

first_name, last_name, full_name, or name may be used to store the name of a person.

ssn or social_security may be used to store US SSN numbers.

phone or phone_number maybe used to store phone numbers.

All data warehouses provide an information schema to extract schema, table and column information. For example, the following query can be used to get metadata from Snowflake:

SELECT

lower(c.column_name) AS col_name,

c.comment AS col_description,

lower(c.data_type) AS col_type,

lower(c.ordinal_position) AS col_sort_order,

lower(c.table_catalog) AS database,

lower({cluster_source}) AS cluster,

lower(c.table_schema) AS schema,

lower(c.table_name) AS name,

t.comment AS description,

decode(lower(t.table_type), 'view', 'true', 'false') AS is_view

FROM

{database}.{schema}.COLUMNS AS c

LEFT JOIN

{database}.{schema}.TABLES t

ON c.TABLE_NAME = t.TABLE_NAME

AND c.TABLE_SCHEMA = t.TABLE_SCHEMA

Regular expressions can be used to match table or column names. For example, the regular expression below detects a column that stores social security numbers:

^.*(ssn|social).*$ Scan data stored in columns

The second strategy is to scan the data stored in columns. Within this strategy the two sub-strategies are:

Regular Expressions

NLP libraries such as Stanford NER Detector and Spacy

The major disadvantage of this strategy is that NLP libraries are compute intensive. It can be prohibitively expensive to run NLP scanners even on moderately sized tables let alone tables of millions or billions of rows. Therefore, a random sample of rows should be scanned. Choosing a random sample is harder than expected. Luckily, a few databases provide builtin functions to choose a random sample. For example, the Snowflake query below provides a random sample:

select {column_list} from {schema_name}.{table_name} TABLESAMPLE BERNOULLI (10 ROWS)Once the rows have been extracted, then can be processed using regular expressions or NLP libraries to detect PII content.

Breaking Ties

As explained in Challenges section, both techniques are required to detect PII data. However both techniques are susceptible to false positives and negatives. More often than not, different techniques suggest conflicting PII types. Detecting the right type is hard and the subject of a future blog post.

PIICatcher: Scan data warehouses for PII data

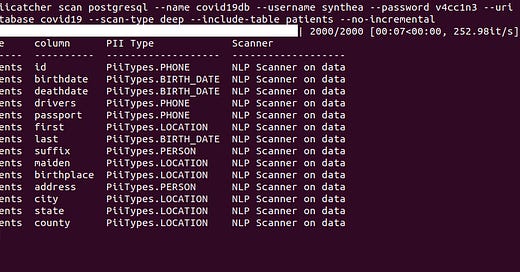

PIICatcher implements both the strategies to scan and detect PII data in the data warehouses.

Features

A data warehouse can be scanned using either strategies. PIICatcher is *battery-included* with a growing set of regular expressions for scanning column names as well as data. It also include Spacy. PIICatcher supports incremental scans and will only scan new or not-yet scanned columns. Incremental scans allow easy scheduling of scans. It also provides powerful options to include or exclude schema and tables to manage compute resources. There are ingestion functions for both Datahub and Amundsen which will tag columns and tables with PII and the type of PII tags.

Check out AWS Glue & Lake Formation Privilege Analyzer for an example of how PIIcatcher is used in production.

Conclusion

Column names and data can be scanned to detect PII in databases. Both strategies are required to reliably detect PII data. PIICatcher is an open source application that implements both these strategies. It can tag datasets with PII and the type of PII to enable data admins to take more informed decisions on data privacy and security.